An Efficient CKKS-FHEW/TFHE Hybrid Encrypted Inference Framework

International Workshop on Privacy Security and Trustworthy AI (PriST-AI 2023), a workshop of ESORICS 2023

Authors

Tzu-Li Liu, Yu-Te Ku, Ming-Chien Ho (Beth), Feng-Hao Liu, Ming-Ching Chang, Chih-Fan Hsu, Wei-Chao Chen, and Shih-Hao Hung

Publication date

2023/9/29

Abstract

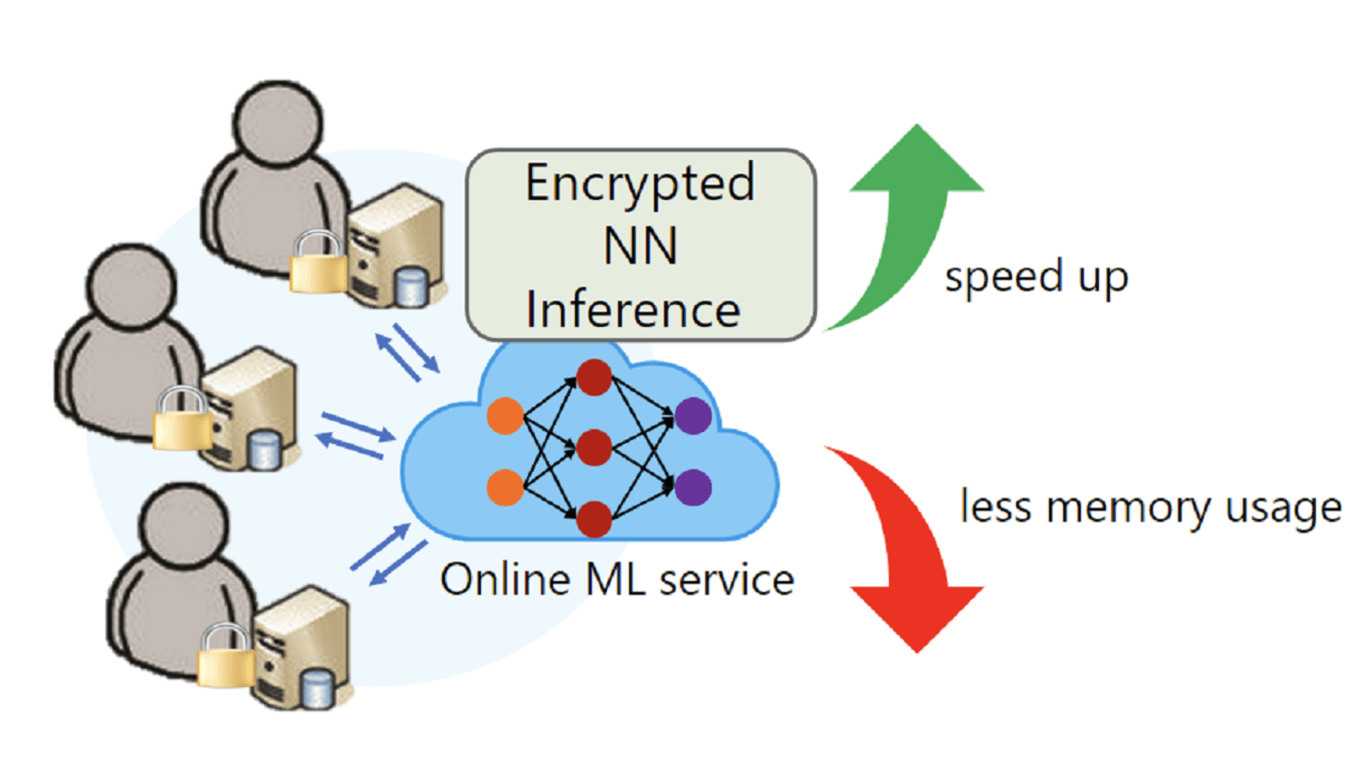

Machine Learning as a Service (MLaaS) is a robust platform that offers various emerging applications. Despite its great convenience, user privacy has become a paramount concern, because user data may be shared or stored in outsourced environments. Fully homomorphic encryption (FHE) presents a viable solution, yet realizing this theoretical approach in practice has remained a significant challenge that requires optimization techniques tailored to each application.

We investigate applying the CKKS-FHEW/TFHE hybrid approach, which inherits the advantages of both schemes, to neural networks (NNs). This idea has been implemented for several conventional ML methods, such as decision tree evaluation and K-means clustering (the PEGASUS system presented at IEEE S&P 2021), and has demonstrated notable efficiency in specific applications. However, its effectiveness for NNs remains unknown.

In this paper, we show that directly applying the PEGASUS system to encrypted NN inference results in a significant accuracy drop of approximately 10% compared to plaintext inference. After careful analysis, we propose a novel lookup-table-aware (LUT-aware) fine-tuning method that slightly adjusts the NN weights and the functional bootstrapping for the ReLU function to mitigate error accumulation throughout the NN computation. Extensive experiments show that appropriately fine-tuning the model recovers much of the lost accuracy, improving it by 7.5% to 15% over the baseline implementation without fine-tuning, while maintaining comparable efficiency.
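To make the source of the error concrete, the following is a minimal plaintext sketch of evaluating ReLU through a lookup table, which is the kind of discretized evaluation that functional bootstrapping performs on ciphertexts. It is not the paper's or PEGASUS's implementation: no encryption is involved, and the function names, the input bound, and the table sizes are all hypothetical choices made for illustration. It only shows that a coarser table yields a larger per-activation error, which is the error a LUT-aware fine-tuning step would need to tolerate.

```python
def build_relu_lut(bound, size):
    """Tabulate ReLU at `size` evenly spaced points on [-bound, bound).

    `bound` and `size` are illustrative parameters, not values from the paper.
    """
    step = 2 * bound / size
    table = [max(0.0, -bound + i * step) for i in range(size)]
    return table, step


def lut_relu(x, table, bound, step):
    """Approximate ReLU(x): quantize x down to a grid index, clamp, and look it up."""
    idx = int((x + bound) // step)
    idx = min(max(idx, 0), len(table) - 1)  # out-of-range inputs saturate
    return table[idx]


def max_lut_error(bound, size, samples=1000):
    """Largest |LUT ReLU - exact ReLU| over evenly spaced inputs in [-bound, bound)."""
    table, step = build_relu_lut(bound, size)
    worst = 0.0
    for k in range(samples):
        x = -bound + 2 * bound * k / samples
        exact = max(0.0, x)
        worst = max(worst, abs(lut_relu(x, table, bound, step) - exact))
    return worst


if __name__ == "__main__":
    for size in (16, 64, 256):
        print(size, max_lut_error(8.0, size))
```

In this sketch the error per activation is bounded by the grid step `2 * bound / size`, so it shrinks as the table grows. In a multi-layer network such errors compound from layer to layer, which is consistent with the accumulation effect the abstract describes.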

Keywords

- Homomorphic encryption

- Privacy-preserving machine learning

- Neural network

- Functional bootstrapping