MERIT: Tensor transform for memory-efficient vision processing on parallel architectures

IEEE Transactions on Very Large Scale Integration Systems 2019

Authors

Yu-Sheng Lin, Wei-Chao Chen, Shao-Yi Chien

Publication date

November 2019

Abstract

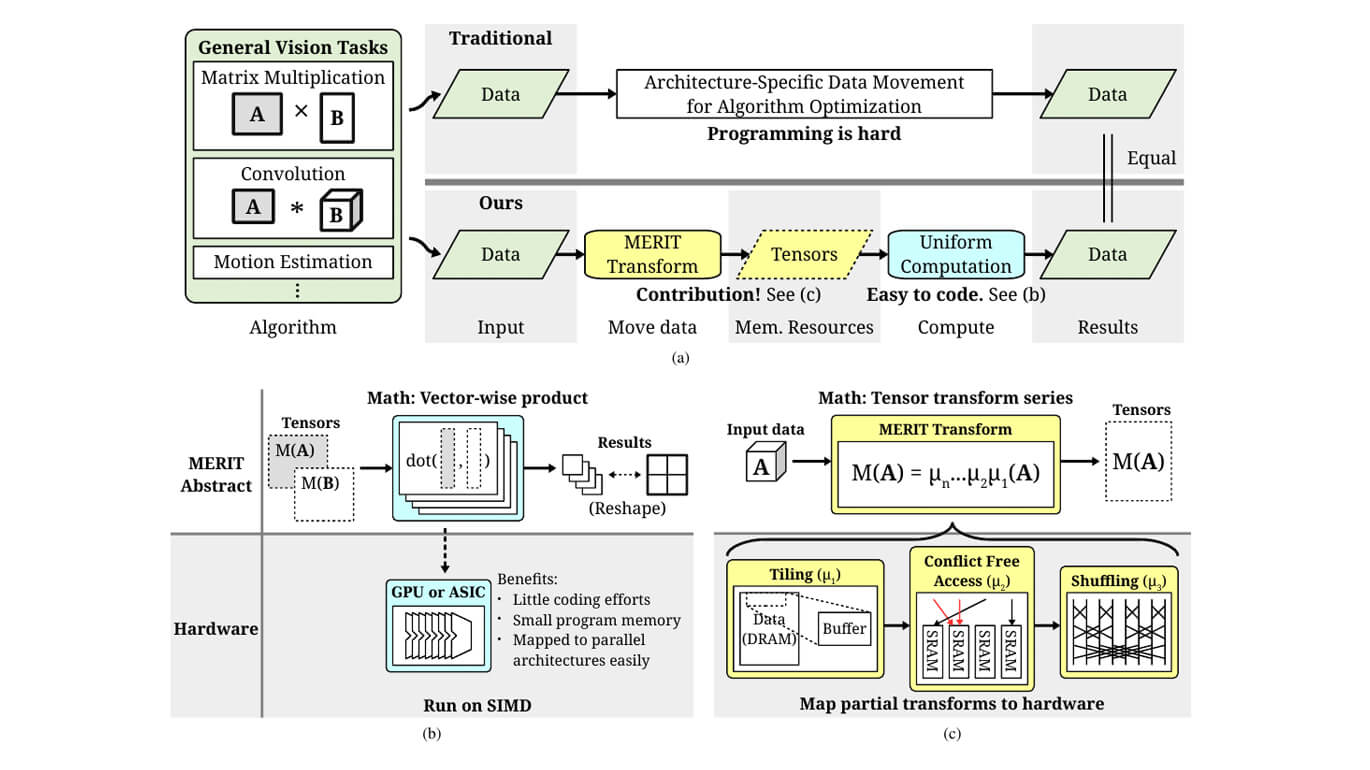

We propose a mathematical formulation that can help transfer parallel-algorithm optimization knowledge across computing platforms. We discover that data movement and storage inside parallel processor architectures can be viewed as tensor transforms across memory hierarchies, which makes it possible to describe many memory optimization techniques mathematically. This transform, which we call the Memory Efficient Ranged Inner-Product Tensor (MERIT) transform, applies not only to DNN tasks but also to many traditional machine learning and computer vision computations.
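The abstract does not spell out the MERIT notation itself. As a rough illustration only, assuming that a "ranged inner product" can be pictured as (1) a pure data-movement step that re-indexes an image into a higher-rank tensor of sliding windows and (2) an inner product over the window axes, the Python sketch below expresses a small 2D correlation that way and checks it against a direct nested loop. The function names `gather_windows`, `ranged_inner_product`, and `direct_correlation` are hypothetical; this is an im2col-style decomposition chosen for illustration, not the authors' formulation.

```python
from typing import List

# Hypothetical illustration (not the paper's MERIT definition):
# split a 2D correlation into a re-indexing step and an inner-product step.
Matrix = List[List[float]]


def gather_windows(image: Matrix, kh: int, kw: int) -> List[List[Matrix]]:
    """Data-movement step: build a rank-4 tensor T[y][x][i][j] = image[y + i][x + j].

    Each (y, x) entry is the kh-by-kw window a parallel worker might load
    into faster memory; no arithmetic happens here, only re-indexing.
    """
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            [[image[y + i][x + j] for j in range(kw)] for i in range(kh)]
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]


def ranged_inner_product(windows: List[List[Matrix]], kernel: Matrix) -> Matrix:
    """Compute step: reduce the two window axes (i, j) against the kernel."""
    kh, kw = len(kernel), len(kernel[0])
    return [
        [
            sum(win[i][j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for win in row
        ]
        for row in windows
    ]


def direct_correlation(image: Matrix, kernel: Matrix) -> Matrix:
    """Reference: the same computation written as a plain nested loop."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[y + i][x + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]


if __name__ == "__main__":
    image = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
    kernel = [[1.0, 0.0], [0.0, -1.0]]
    result = ranged_inner_product(gather_windows(image, 2, 2), kernel)
    # The two-step form matches the direct loop.
    assert result == direct_correlation(image, kernel)
    print(result)  # [[-5.0, -5.0, -5.0], [-5.0, -5.0, -5.0], [-5.0, -5.0, -5.0]]
```

Separating the re-indexing from the arithmetic mirrors the abstract's point that data movement can be described as a tensor transform in its own right; on real hardware the first step would correspond to tiling data into a faster memory level rather than materializing every window.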

We also demonstrate that many popular applications can be converted to a succinct MERIT notation on GPUs, speeding up GPU kernels by up to 20 times while using only half as many code tokens. In addition, we apply the principles of the proposed transform to design a specialized hardware unit, the MERIT-z processor, which can run a variety of DNN tasks as well as other computer vision tasks while providing area and power efficiency comparable to dedicated DNN ASICs.

Keywords

- DNN Accelerator